I’m currently adding a new feature for web page sources. I got it up and running in a day, which was a relief after the delays in the last update.

However, it’s far from ideal: rendering is painfully slow, and there doesn’t seem to be anything I can do about it. The trouble is WebKit, which must be used for web content on iOS or the app risks being rejected from the App Store. WebKit only renders to a WKWebView, which causes some problems for me. The WKWebView is pretty useless to me outside of editing the source, because everything I do is eventually handled in OpenGL.

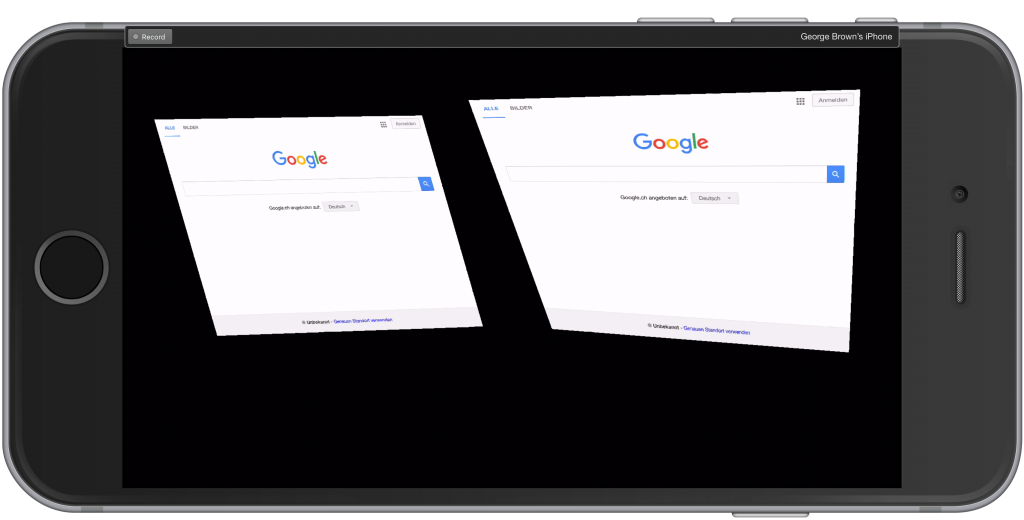

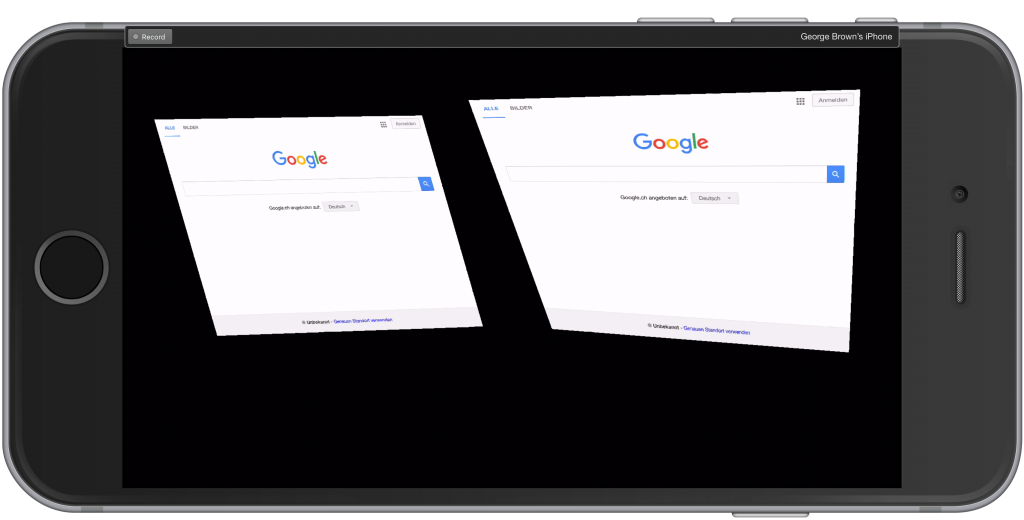

With no facilities to access the rendered web page outside of the WKWebView, I have to create an offscreen one. Rendering it to OpenGL is then a two-step process: first, copy the view contents to a UIImage, then upload the UIImage to a texture. We’re supposed to use drawViewHierarchyInRect:afterScreenUpdates:, as described in this Technical Q&A. The trouble is that this method is too slow to run every frame: it interferes with animation and can make the keyboard unresponsive.
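A minimal Swift sketch of that two-step capture follows. It assumes the offscreen WKWebView is already sized and attached to a view hierarchy, and that textures are created with GLKit's GLKTextureLoader on OpenGL ES (the post doesn't say which loader is used, so that part is my assumption). The names snapshot(of:), uploadTexture(from:) and textureOptions are hypothetical. Both steps run on the main thread here, which is exactly the cost described above.

```swift
import UIKit
import WebKit
import GLKit

// Hypothetical loader options: flip the image so it matches OpenGL's
// bottom-left texture-coordinate origin.
let textureOptions: [String: NSNumber] = [
    GLKTextureLoaderOriginBottomLeft: NSNumber(value: true)
]

/// Step 1: copy the web view's current contents into a UIImage.
/// Must be called on the main thread; this is the slow part.
func snapshot(of webView: WKWebView) -> UIImage {
    let renderer = UIGraphicsImageRenderer(bounds: webView.bounds)
    return renderer.image { _ in
        // afterScreenUpdates: true waits for pending changes to be drawn first.
        _ = webView.drawHierarchy(in: webView.bounds, afterScreenUpdates: true)
    }
}

/// Step 2: upload the UIImage to an OpenGL ES texture.
/// Assumes the renderer's EAGLContext is current on this thread.
func uploadTexture(from image: UIImage) throws -> GLKTextureInfo? {
    guard let cgImage = image.cgImage else { return nil }
    return try GLKTextureLoader.texture(with: cgImage, options: textureOptions)
}
```

GLKTextureLoader creates a new texture name on every call, so a loop that keeps refreshing the page would also need to delete the previous texture (for example with glDeleteTextures on its name) to avoid leaking GPU memory.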

OK, so can we do this in a worker thread and leave the main thread alone? Sadly not. Even though my WKWebView is offscreen, drawViewHierarchyInRect:afterScreenUpdates: will not render anything when called off the main thread. We can upload to the texture asynchronously, but not the slow part: copying the WKWebView to a UIImage. None of the other methods, such as renderInContext:, work either; I think I’ve tried every combination.
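Since only the upload half can leave the main thread, one way to split the work is sketched below. It again assumes GLKit, and that the renderer's EAGLContext is available so a GLKTextureLoader can share its sharegroup; the AsyncTextureUploader type is hypothetical. The snapshot itself still has to be taken on the main thread before calling upload.

```swift
import UIKit
import OpenGLES
import GLKit

/// Hypothetical helper: uploads an already-captured snapshot off the main thread.
final class AsyncTextureUploader {
    private let loader: GLKTextureLoader

    /// `glContext` is assumed to be the context the renderer draws with;
    /// sharing its sharegroup makes the new texture usable there.
    init(glContext: EAGLContext) {
        loader = GLKTextureLoader(sharegroup: glContext.sharegroup)
    }

    /// `image` must come from the main-thread snapshot step.
    /// GLKTextureLoader loads in the background; passing `queue: nil`
    /// delivers the completion on the main queue.
    func upload(_ image: UIImage, completion: @escaping (GLKTextureInfo?) -> Void) {
        guard let cgImage = image.cgImage else {
            completion(nil) // nothing to upload (e.g. an empty image)
            return
        }
        let options = [GLKTextureLoaderOriginBottomLeft: NSNumber(value: true)]
        loader.texture(with: cgImage, options: options, queue: nil) { info, error in
            if let error = error {
                print("Texture upload failed: \(error)")
            }
            completion(info)
        }
    }
}
```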

So for now I’m going to have to make some compromises. The first thing I tried was limiting updates to when they’re absolutely necessary, such as when any part of the web page redraws itself. But is there any way to detect and observe when the WKWebView is redrawn? No, no there isn’t. I tried drilling down through the WKWebView’s layers, of which there are many, and they eventually bottom out in layers backed by IOSurfaces. I suspect this underlying IOSurface is what’s being rendered to, and on OS X we could easily copy it or bind it to a texture, but on iOS the IOSurface APIs are private and can’t be used in an App Store app, so no luck there.

We can monitor navigation, but a completed navigation doesn’t mean the screen has been redrawn yet. I’m currently looking into injecting JavaScript to detect when anything changes, but from what I’ve read so far, we still need to allow a delay between when a change happens and when it is rendered on screen. It might bring some improvement for web pages with little animation, but ultimately we’ll still have problems with websites that contain animations or video.
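A rough sketch of the JavaScript-injection idea follows, assuming a DOM MutationObserver is used as the change signal. The message name pageChanged, the PageChangeHandler type and makeObservedConfiguration(handler:) are hypothetical. A MutationObserver only reports DOM changes, so CSS animations, canvas drawing and video playback won't trigger it, and a change report still doesn't guarantee the new frame has been drawn yet.

```swift
import WebKit

/// Hypothetical message name shared by the script and the native handler.
let changeMessageName = "pageChanged"

/// Injected script: report any DOM mutation to native code.
/// MutationObserver already batches records, so bursts arrive as one callback.
let changeObserverSource = """
(function() {
  new MutationObserver(function() {
    window.webkit.messageHandlers.\(changeMessageName).postMessage(Date.now());
  }).observe(document.documentElement, {
    subtree: true, childList: true, attributes: true, characterData: true
  });
})();
"""

/// Receives change reports and marks the current snapshot as stale.
final class PageChangeHandler: NSObject, WKScriptMessageHandler {
    private(set) var isDirty = true

    func userContentController(_ userContentController: WKUserContentController,
                               didReceive message: WKScriptMessage) {
        if message.name == changeMessageName { isDirty = true }
    }

    /// Call after taking a snapshot.
    func markClean() { isDirty = false }
}

/// Builds a configuration that injects the observer once the document has loaded.
func makeObservedConfiguration(handler: PageChangeHandler) -> WKWebViewConfiguration {
    let config = WKWebViewConfiguration()
    let script = WKUserScript(source: changeObserverSource,
                              injectionTime: .atDocumentEnd,
                              forMainFrameOnly: true)
    config.userContentController.addUserScript(script)
    config.userContentController.add(handler, name: changeMessageName)
    return config
}
```

WKUserContentController keeps a strong reference to the handler, so it is worth calling removeScriptMessageHandler(forName:) when the web view goes away.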

For now I think I’m going to combine three things: a user-set refresh rate; some way of detecting changes to the rendered web page so we only refresh when needed; and, for cases where the user wants fast refresh, facilities to suppress the more CPU-intensive work while we’re animating from view to view or running some other main-thread-intensive process. All in all, it’s not going to be as good as I would like. Still, it’s something to work on in the future.
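One way those three ideas could fit together is a small scheduling policy, sketched here in plain Swift. SnapshotScheduler and its properties are hypothetical: isDirty would be set by whatever change detection is available, isSuppressed while animating, and alwaysRefresh for pages with video or animation where change detection can't help.

```swift
import Foundation

/// Hypothetical policy deciding, once per frame, whether to take a new snapshot.
struct SnapshotScheduler {
    /// Minimum time between snapshots, derived from the user's refresh rate.
    let minInterval: TimeInterval
    /// Set when change detection reports that the page changed.
    var isDirty = true
    /// Set while animating between views or doing other main-thread-heavy work.
    var isSuppressed = false
    /// Ignore change detection and refresh at the full rate (video, animation).
    var alwaysRefresh = false
    private var lastSnapshot = -Double.infinity

    init(refreshesPerSecond: Double) {
        precondition(refreshesPerSecond > 0, "refresh rate must be positive")
        minInterval = 1.0 / refreshesPerSecond
    }

    /// Returns true if a snapshot should be taken at time `now` (in seconds).
    mutating func shouldSnapshot(at now: TimeInterval) -> Bool {
        guard isDirty || alwaysRefresh,
              !isSuppressed,
              now - lastSnapshot >= minInterval else { return false }
        lastSnapshot = now
        isDirty = false
        return true
    }
}
```

In an app this would presumably be driven from a per-frame callback such as a CADisplayLink, passing CACurrentMediaTime() as now; keeping the policy free of UIKit makes it easy to tune without touching the capture code.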