Well, I had a late night going through my OpenGL code line by line, reworking a few things from the top down, simplifying code, reconsidering a few things and generally tidying up, and it’s fixed my problems. I’ve just submitted the app for review, so hopefully it should go through in a week or so (according to http://appreviewtimes.com/).

All posts by George Brown

iOS release delay

My latest iOS release has suffered a setback. A few issues came up during testing, mostly minor and now fixed, but I’ve run into a snag with older iOS devices that don’t support OpenGL ES3. The code should work in theory, but a certain combination is refusing to cooperate in ES2. As soon as I track down the problem, I’ll submit the release to Apple for review.
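The post doesn’t say which combination was failing under ES2. As general background only, one frequent source of ES2/ES3 breakage is shader syntax: GLSL ES 3.00 uses `in`, `texture()` and a declared `out` colour, whereas GLSL ES 1.00 (the ES2 shading language) uses `varying`, `texture2D()` and `gl_FragColor`. Here is a minimal C sketch, assuming a fragment shader body written once with neutral macro names (`VARYING_IN`, `TEXTURE2D` and `FRAG_COLOR`, all hypothetical, as are the function names), that prepends a version-specific header. It is not the app’s actual code.

```c
#include <stdlib.h>
#include <string.h>

/* Hypothetical header for a fragment shader whose body is written once using
   the neutral macro names VARYING_IN, TEXTURE2D and FRAG_COLOR. */
static const char *fragment_header(int es_major) {
    if (es_major >= 3) {
        /* GLSL ES 3.00: 'in', texture() and a user-declared output. */
        return "#version 300 es\n"
               "precision mediump float;\n"
               "#define VARYING_IN in\n"
               "#define TEXTURE2D texture\n"
               "out vec4 fragColor;\n"
               "#define FRAG_COLOR fragColor\n";
    }
    /* GLSL ES 1.00 (OpenGL ES 2): 'varying', texture2D() and gl_FragColor. */
    return "#version 100\n"
           "precision mediump float;\n"
           "#define VARYING_IN varying\n"
           "#define TEXTURE2D texture2D\n"
           "#define FRAG_COLOR gl_FragColor\n";
}

/* Returns a newly allocated header + body string, or NULL if allocation
   fails. The caller must free() the result. */
static char *build_fragment_source(int es_major, const char *body) {
    const char *header = fragment_header(es_major);
    size_t header_len = strlen(header);
    size_t body_len = strlen(body);
    char *src = malloc(header_len + body_len + 1);
    if (src == NULL) {
        return NULL;
    }
    memcpy(src, header, header_len);
    memcpy(src + header_len, body, body_len + 1); /* also copies the '\0' */
    return src;
}
```

A body such as `VARYING_IN vec2 vTexCoord; uniform sampler2D uTexture; void main() { FRAG_COLOR = TEXTURE2D(uTexture, vTexCoord); }` then compiles under either version. The resulting string would be passed to `glShaderSource` as usual, with `es_major` being whichever ES version the context was actually created with.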

Updates

Just a quick update as we approach Christmas. I’ve been a bit busy visiting family and also got myself run down by flu last week, so development has taken a bit of a hit over the last few weeks. I’ve just got back into things over the last couple of days with renewed vigour and some late-night coding sessions.

I’ve almost finished the next iOS update, which will add the text functionality that I put in the OSX version; that part is pretty much wrapped up now. I’ve still got to address a couple of bugs that seem to have popped up with a recent iOS update, and improve some things with detecting external devices.

While I seem to be in full dev mode at the moment, I’ve got personal plans for the next few days. I might be able to wrap things up by tomorrow and send the update to Apple for review, but I doubt it’ll make it through the review process until the New Year, though I have no idea what Apple are like during the holiday season.

I will keep you all posted, and if this is the season for you, enjoy your holidays!

New version out

I’ve just put out the latest version for OSX, and I’ve realised my point release system is a little screwed up. With a new feature it should probably be version 1.2, or I could just go with a build number, which seems to be popular too, especially in these days of continual releases.
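For what it’s worth, under the Semantic Versioning convention (semver.org) a new backward-compatible feature bumps the minor number and resets the patch number, so 1.1.1 plus text support would become 1.2.0 rather than 1.1.2. A small C sketch of that rule, using a hypothetical `Version` type and helper functions that are not part of the app:

```c
#include <stdio.h>

/* Hypothetical MAJOR.MINOR.PATCH version, following Semantic Versioning. */
typedef struct {
    int major;
    int minor;
    int patch;
} Version;

/* New backward-compatible feature: bump MINOR and reset PATCH to 0. */
static Version bump_minor(Version v) {
    v.minor += 1;
    v.patch = 0;
    return v;
}

/* Backward-compatible bug fixes only: bump PATCH. */
static Version bump_patch(Version v) {
    v.patch += 1;
    return v;
}

/* Writes "MAJOR.MINOR.PATCH" into buf; returns snprintf's result
   (the number of characters the full string needs, excluding '\0'). */
static int format_version(Version v, char *buf, size_t size) {
    return snprintf(buf, size, "%d.%d.%d", v.major, v.minor, v.patch);
}
```

Starting from `{1, 1, 1}`, `bump_minor` gives 1.2.0 while `bump_patch` gives 1.1.2. A separate, ever-increasing build number can sit alongside either scheme.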

I’ll start on the iOS update next. The sources story will need a little rethinking so users can add content from more than just the camera roll, and I need to work out how to let the user use the text option, but I think most of the drawing code should still work.

Reality Augmenter Release Notes 1.1.2

New Features

- Text support: you can now create and alter text in the application, with support for all fonts supported by OSX, and an option to scroll the text with an adjustable width to control aspect ratio. Text can be used on its own, or combined with other sources by using the text as a mask for eye-catching effects.

Other

- Fixed table views not looking quite right in El Capitan.

- Renamed some of the sources to remove redundancy in the names.

- Increased test coverage.

Working on next versions

I’m currently busy implementing a new Text feature for the Reality Augmenter, and it’s been a bit harder than I expected. I was expecting the actual OpenGL rendering to be the tricky part, but it actually wasn’t too difficult. However, I hadn’t properly thought out the user interface, which caused me some unexpected problems with the font system and with how I was going to let the user configure the functionality.

Anyway, it’s nearly finished now, so a new update for OSX should be coming soon, and a new version for iOS a few weeks after that (depending on the review process).

New version for OSX

Well, I’ve just finished porting all my new iOS features (images and custom masks) back into OSX and generally cleaned up the code. I also did a lot of work extending my test coverage over more of the core functionality after trying out Xcode’s new code coverage feature.

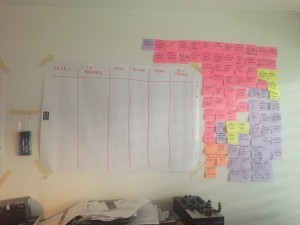

Above is my Scrum board, currently clear after finally consolidating the iOS app fully into my master source branch. I like having the board even though I work alone at home: it helps me break down jobs and makes me think about exactly what each version will contain. It’s also a good reminder to work on the project.

TBH though, as it’s just me, I can get a little lax. For example, I’ll put down one note for a new feature that’s actually about ten individual tasks, or I’ll suddenly start refactoring a huge bunch of code just to make one task simpler. The Scrum board helps keep it all under control, especially things like feature creep.

As a sole developer, it’s quite hard to decide which features to include in any given release. You keep getting ideas for new features and updates, and when you’re working on an app of your own making, it’s very tempting to just start working them in, pushing the final product or release ever further back. Taking time to plan what goes into each release, making up all the tasks and laying them out on a board makes the whole thing look a lot more manageable. New features and updates can be put aside while still being considered in the coding of the current release.

Reality Augmenter Release Notes 1.1.1

Long delay this time: updates were suspended while iOS development was completed. The iOS version is now released, which means some changes first implemented in iOS are now coming to the OSX version.

Unit testing has been greatly extended to cover more core functionality, including the rendering. This picked up some unusual problems that required fixing, further delaying the update, but it will bring greater benefits in the long term.

New Features

- Image support, most image formats can now be imported and used as sources.

- Processors and Sources can now be used as masks. This means you are no longer restricted to circle masks and can use videos, images, Syphon, QC and screen capture as inputs. A circle mask is still created and maintained in the application.

- Mask invert and threshold: as part of the mask updates, individual layers can now invert masks and define a greyscale threshold for mask coverage.
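The notes don’t describe exactly how the invert and threshold are applied. The following minimal per-pixel sketch in C assumes a hard cut-off on Rec. 709 luminance, with inversion applied after the threshold. In the app this would presumably live in a fragment shader and may blend softly instead; the function names are hypothetical.

```c
#include <stdbool.h>

/* Greyscale value of a mask pixel, channels in [0, 1].
   Rec. 709 luma weights are an assumption for this sketch. */
static float luminance(float r, float g, float b) {
    return 0.2126f * r + 0.7152f * g + 0.0722f * b;
}

/* Hypothetical coverage: 1.0 where the layer shows through, 0.0 where it is
   masked out. Hard threshold first, then optional inversion. */
static float mask_coverage(float r, float g, float b,
                           float threshold, bool invert) {
    float covered = luminance(r, g, b) >= threshold ? 1.0f : 0.0f;
    return invert ? 1.0f - covered : covered;
}

/* Applies the coverage to a layer pixel's alpha. */
static float masked_alpha(float layer_alpha, float coverage) {
    return layer_alpha * coverage;
}
```

With a threshold of 0.5, a white mask pixel lets the layer through and a black one hides it; setting `invert` swaps the two.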

Other

- Fixed occasional rendering glitches picked up by unit testing.

- Layer updates: setting/unsetting masks and turning layers, masks and overlays on or off are now more reliable and responsive.

- General code clean-up and cleaner organisation as part of the code base shared with iOS.

New Xcode 7 Testing Facilities

I’m updating the unit tests for the next OSX release and have noticed a couple of new features in Xcode.

Test code coverage is nice. It was available before, but wasn’t the easiest to set up. It’s now built in and can be turned on from the Scheme editor, and the reports turn up under the Reports tab.

The actual code coverage report could be presented better; there doesn’t seem to be any order to it. My coverage isn’t too bad on the underlying model and rendering facilities, but I can see some stuff I’ve missed. It also shows classes I’d forgotten about altogether: a couple are things I’ve overlooked or put off, but a lot of them are to do with my user interface.

Which brings me to the next new feature in Xcode: UI testing. I know there are companies that provide UI testing for Xcode, but I didn’t see a free one, so I’d been testing manually. It’s finally been added in Xcode 7, and I’ll be looking at using it in the future if it looks like it’ll work.

Working on next release for OSX

I’m currently porting some features of the iOS version of the Reality Augmenter back into OSX. This includes the ability to load images and to use custom masks made from any of the source types.

So no longer will you be limited to the circle mask for projection mapping in OSX: you’ll be able to use videos, images and Quartz Compositions to mask your mapped areas.

The next release should be out very soon. I’m just tidying up a few odds and ends and need to make sure testing is up to date.